RAP Dataset v2.0

RAP dataset v2.0 aims to promote research on pedestrian retrieval with various types of queries, including pedestrian attributes and person images. The overall types of annotations are shown in Table 1.

Pedestrian Attribute

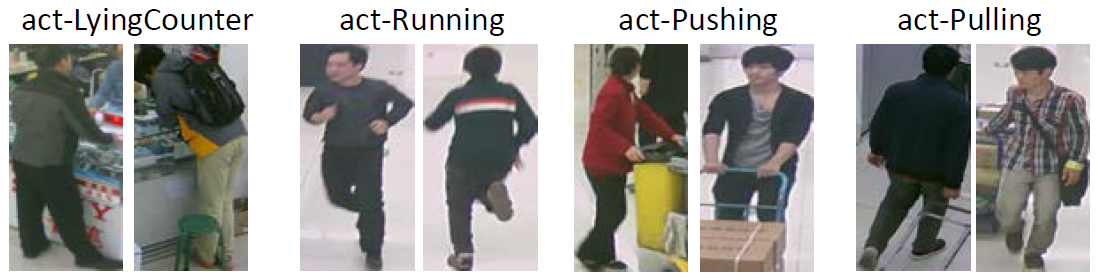

The RAP dataset v2.0 contains 69 binary attributes and 3 multi-class attributes, such as gender, backpack, and clothing types. Besides common pedestrian attributes, some attributes, such as person actions, are annotated for the first time in the RAP dataset. Some samples are shown in Figure 1.
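One way to hold these labels in code is a per-image record with 69 binary entries and 3 class indices, giving 72 attributes in total. This is a minimal, hypothetical sketch: the class name `AttributeLabel`, the list layout, and the index order are assumptions for illustration, not the format of the released annotation files.

```python
from dataclasses import dataclass
from typing import List

# Counts taken from the dataset description; everything else is hypothetical.
NUM_BINARY = 69
NUM_MULTI_CLASS = 3


@dataclass
class AttributeLabel:
    """Hypothetical per-image attribute label (not the official file format)."""
    binary: List[int]       # one 0/1 entry per binary attribute
    multi_class: List[int]  # one class index per multi-class attribute

    def __post_init__(self) -> None:
        # Validate lengths and value ranges at construction time.
        if len(self.binary) != NUM_BINARY:
            raise ValueError(f"expected {NUM_BINARY} binary labels, got {len(self.binary)}")
        if any(v not in (0, 1) for v in self.binary):
            raise ValueError("binary labels must be 0 or 1")
        if len(self.multi_class) != NUM_MULTI_CLASS:
            raise ValueError(
                f"expected {NUM_MULTI_CLASS} multi-class labels, got {len(self.multi_class)}"
            )
        if any(v < 0 for v in self.multi_class):
            raise ValueError("class indices must be non-negative")

    @property
    def num_attributes(self) -> int:
        # 69 binary + 3 multi-class = 72 attributes in total.
        return len(self.binary) + len(self.multi_class)

    def positive_binary_indices(self) -> List[int]:
        # Indices of binary attributes that are present (label 1).
        return [i for i, v in enumerate(self.binary) if v == 1]


label = AttributeLabel(binary=[0] * 68 + [1], multi_class=[0, 2, 1])
print(label.num_attributes)             # 72
print(label.positive_binary_indices())  # [68]
```

Keeping the multi-class attributes as class indices rather than one-hot vectors keeps the 72-attribute count explicit; a training pipeline would typically expand them as needed.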

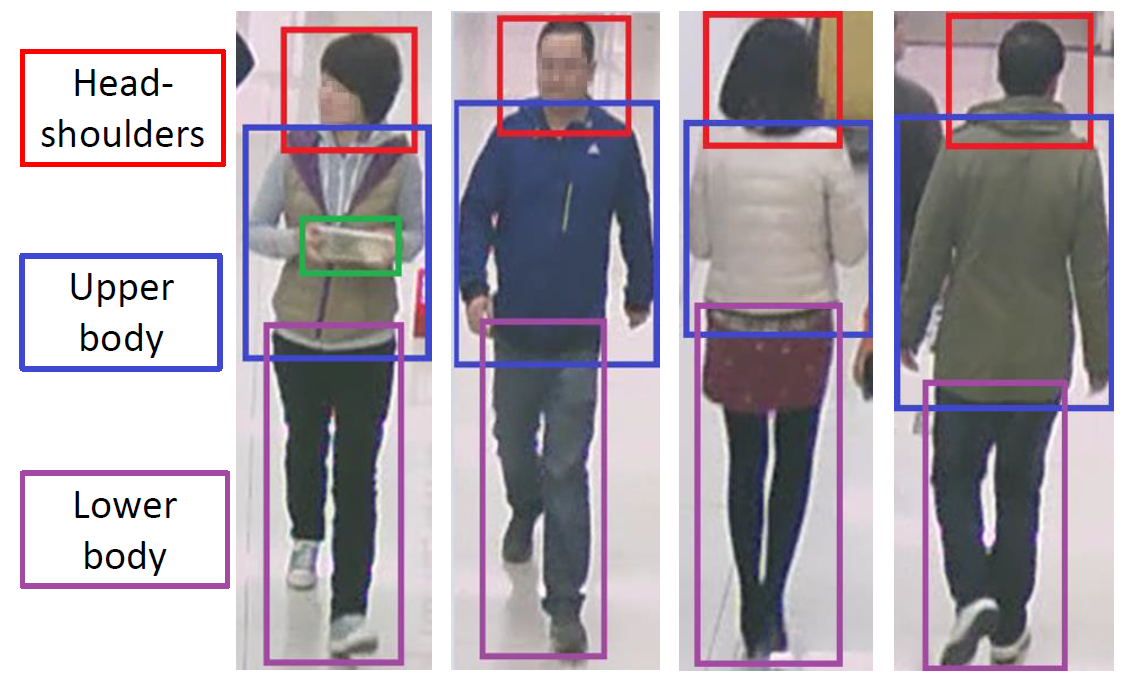

Besides the 72 attributes, pedestrian orientations, occlusion patterns, and coarse body parts are also annotated, which are useful for pedestrian-related applications. The locations of body parts and accessories are annotated as well. Samples with body part annotations are shown in Figure 2, where green boxes mark the locations of accessories.
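Since body parts and accessories are annotated as boxes, a natural in-memory representation is one record per pedestrian that can re-express each part box relative to the whole-body box, for example to crop part regions. This is a hypothetical sketch: the names `Box` and `PartAnnotation` and the (x, y, width, height) pixel convention are assumptions, and the released annotations may use a different layout.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Box:
    # Assumed convention: top-left corner (x, y), width w, height h, in pixels.
    x: int
    y: int
    w: int
    h: int

    def contains(self, other: "Box") -> bool:
        # True if `other` lies entirely inside this box.
        return (
            self.x <= other.x
            and self.y <= other.y
            and other.x + other.w <= self.x + self.w
            and other.y + other.h <= self.y + self.h
        )

    def relative_to(self, outer: "Box") -> "Box":
        # Shift this box into the frame whose origin is outer's top-left corner.
        return Box(self.x - outer.x, self.y - outer.y, self.w, self.h)


@dataclass
class PartAnnotation:
    """Hypothetical container for one pedestrian's body-part and accessory boxes."""
    body: Box
    head_shoulder: Optional[Box] = None  # None when the part is not annotated
    upper_body: Optional[Box] = None
    lower_body: Optional[Box] = None
    accessories: List[Box] = field(default_factory=list)

    def to_body_frame(self) -> Dict[str, Box]:
        # Express every annotated box relative to the whole-body box.
        out: Dict[str, Box] = {}
        for name in ("head_shoulder", "upper_body", "lower_body"):
            box = getattr(self, name)
            if box is not None:
                out[name] = box.relative_to(self.body)
        for i, box in enumerate(self.accessories):
            out[f"accessory_{i}"] = box.relative_to(self.body)
        return out


ann = PartAnnotation(
    body=Box(100, 50, 60, 180),
    head_shoulder=Box(110, 50, 40, 40),
    accessories=[Box(140, 120, 30, 40)],
)
print(ann.to_body_frame()["head_shoulder"])  # Box(x=10, y=0, w=40, h=40)
```

Accessory boxes are not required to lie inside the body box (a carried bag can extend past it), so `contains` is only a convenience check and is not enforced.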

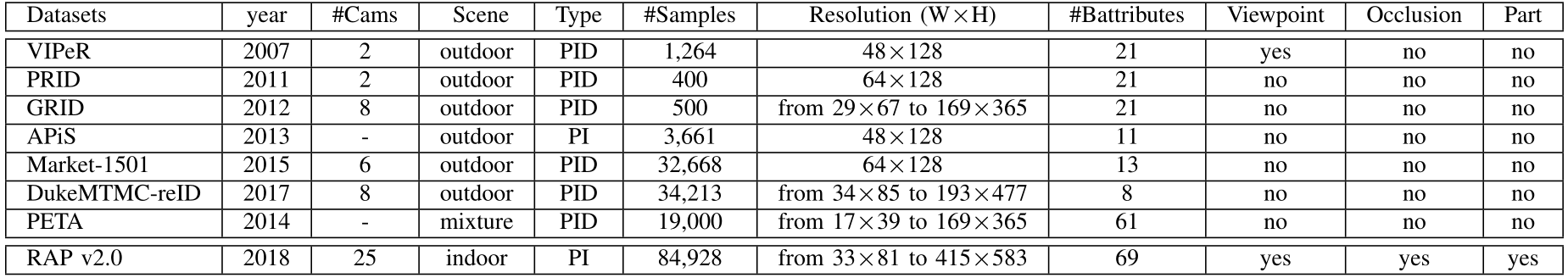

The overall comparison with existing pedestrian attribute datasets is shown in Table 2.

Pedestrian Identity

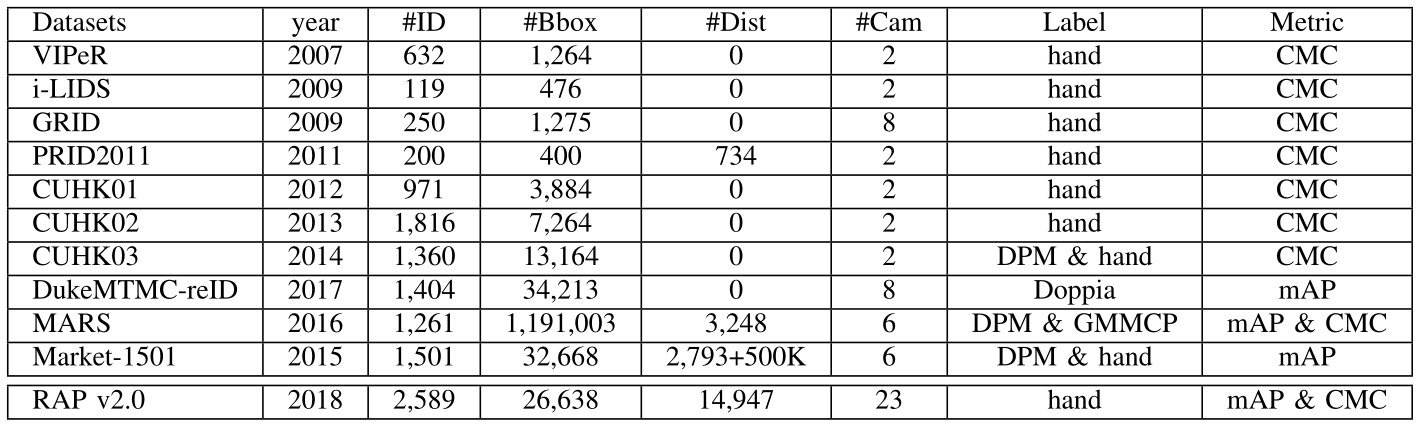

The RAP dataset v2.0 contains 2,589 person identities. Existing person ReID datasets usually cover only a short time period, under the assumption that the clothing of each person stays unchanged. In contrast, the RAP dataset v2.0 was collected over a long period, and 598 of its person identities appear on more than one day. The clothing of these identities is not exactly the same across different days. Samples with cross-day person identity annotations are shown in Figure 3. Based on this dataset, researchers can develop more efficient algorithms for long-term person retrieval.
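The cross-day statistic (598 of the 2,589 identities appear on more than one day) amounts to grouping images by identity and counting distinct capture days. A minimal sketch, assuming each image record can be reduced to a hypothetical `(person_id, capture_date)` pair; the function name is illustrative, the dates in the usage lines are invented, and the real annotation fields may differ.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Set, Tuple


def cross_day_identities(records: Iterable[Tuple[int, date]]) -> Dict[int, Set[date]]:
    """Map each identity seen on more than one day to the set of days it appears."""
    days_by_id: Dict[int, Set[date]] = defaultdict(set)
    for person_id, day in records:
        days_by_id[person_id].add(day)  # several images from the same day collapse
    return {pid: days for pid, days in days_by_id.items() if len(days) > 1}


# Invented example records, not taken from the dataset.
records = [
    (1, date(2017, 1, 1)), (1, date(2017, 1, 2)),  # identity 1: two days
    (2, date(2017, 1, 1)), (2, date(2017, 1, 1)),  # identity 2: one day, two images
    (3, date(2017, 2, 1)), (3, date(2017, 3, 1)),  # identity 3: two days
]
print(sorted(cross_day_identities(records)))  # [1, 3]
```

Applied to the full identity annotations, `len(cross_day_identities(...))` would give the number of cross-day identities, provided the capture date of each image is available.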

The overall comparison with existing person ReID datasets is shown in Table 3.

| Class | Attribute |
|---|---|
| Spatial-Temporal | Time, scene ID, image position, bounding boxes of body/head-shoulder/upper-body/lower-body/accessories. |
| Whole | Gender, age, body shape, role. |
| Accessories | Backpack, single shoulder bag, handbag, plastic bag, paper bag, etc. |
| Posture, Actions | Viewpoints, telephoning, gathering, talking, pushing, carrying, etc. |
| Occlusions | Occluded parts, occlusion types. |
| Parts: head | Hair style, hair color, hat, glasses. |
| Parts: upper | Clothes style, clothes color. |
| Parts: lower | Clothes style, clothes color, footwear style, footwear color. |

Figure 1: Samples with action attribute annotations.

Figure 2: Samples with body part annotations.

Table 2: Comparison with existing pedestrian attribute datasets.

Figure 3: Samples with cross-day person identity annotations.

Table 3: Comparison with existing person ReID datasets.

Download

If you want to use the RAP dataset (for scientific research only), please fill in the agreement here.

The related code has been released. A strong baseline has also been released recently.

Citation

Please kindly cite our work if it helps your research: